Frequency tables are tables that shows how frequently various categories of categorical variables occur in data and how many different categories are there and which are those categories, it is also useful for classification to find the frequency of each category of label variable(column) . this help us to separate helpful categories from not so helpful categories.

suppose some category in categorical variable occurs just ones or twice then it is not going to be helpful from statistical point of view .

Lets see how to make frequency tables---

first i have downloaded auto_prices data set ,then i have taken out come categorical columns and created list of those columns ,this list along with dataset is passed to the count unique function . that function simply loop through each column in the list and counts number of times each unique value occurs in column and finally prints the same.

above code gives following frequency table---

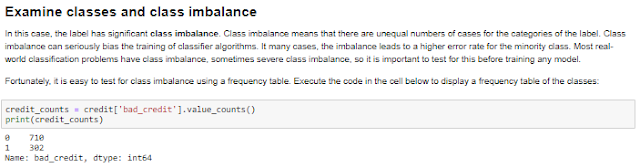

Examining classes and class imbalances----

Comments

Post a Comment